Anonymizing User Data: Essential Techniques for Developers

In today’s data-driven world, handling user data responsibly is paramount. With increasing privacy regulations like GDPR and CCPA, developers are on the front lines of protecting sensitive information. One critical approach to achieving this is anonymizing user data. This process transforms data to prevent identification while retaining its utility for tasks like testing, analytics, and development.

Anonymization isn’t just about compliance; it’s a security best practice that minimizes the risk associated with data breaches. By understanding and implementing effective anonymization techniques, developers can build more secure and privacy-respecting applications.

Why Developers Must Master Anonymizing User Data Techniques

Developers often work with copies of production data for testing, debugging, and feature development. Using real, sensitive data in non-production environments creates significant security risks. Unauthorized access to a development database containing live customer details can lead to catastrophic data breaches.

Furthermore, legal frameworks mandate the protection of Personally Identifiable Information (PII). Failure to comply can result in hefty fines and damage to reputation. Anonymization allows developers to work with realistic datasets that mimic production data structures and characteristics without exposing actual user identities. This enables robust testing without compromising privacy.

Core Anonymizing User Data Techniques Explained

Let’s dive into some of the fundamental techniques developers can use to anonymize data:

1. Data Masking:

Data masking, sometimes called data obfuscation, replaces sensitive data elements with realistic but fictional data. The goal is to make the data useless to unauthorized parties while keeping it functional for application logic and testing. Techniques within data masking include:

- Substitution: Replacing original values with random, yet contextually appropriate, values (e.g., replacing a real name with a name from a list of fake names).

- Shuffling/Swapping: Rearranging values within a column across different records (e.g., mixing up email addresses among users). This retains data distribution but breaks the link to individual records.

- Redaction/Nulling Out: Simply removing or replacing sensitive data with a placeholder or null value. This is the simplest but can reduce data utility significantly.

- Encryption: While primarily a security technique, encryption can act as a form of masking by rendering data unreadable without the decryption key. Because anyone holding the key can recover the original values, encrypted data is better regarded as pseudonymized than anonymized; true anonymization aims to keep data non-relinkable even if a key is compromised.

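- Hashing: Transforming data into a fixed-size string using a one-way function. Often used for passwords, it can also mask other data. With a modern hash such as SHA-256, collisions (different inputs producing the same hash) are rarely the practical concern; the bigger risk is that low-entropy values like email addresses or phone numbers can be recovered by hashing candidate values and comparing the results. A per-value ‘salt’ (random data added before hashing) hinders precomputed lookups but also stops equal inputs from producing equal outputs, while a keyed hash (HMAC) with a secret key keeps the mapping consistent at the cost of having a key to protect.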
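The first three masking techniques above can be sketched in a few lines of Python. This is a minimal illustration rather than a production tool: the `FAKE_NAMES` pool, the helper names, and the sample records are all made up for the example, and the seeded `random.Random` is used only so the output is repeatable.

```python
import random

# Hypothetical pool of replacement names; a real pool would be much larger.
FAKE_NAMES = ["Alex Morgan", "Sam Rivera", "Jo Chen", "Pat Novak"]


def substitute(records, column, pool, rng):
    """Substitution: replace each value with a random entry from a pool."""
    return [{**r, column: rng.choice(pool)} for r in records]


def shuffle_column(records, column, rng):
    """Shuffling: permute one column across records, keeping its set of values."""
    values = [r[column] for r in records]
    rng.shuffle(values)
    return [{**r, column: v} for r, v in zip(records, values)]


def redact(records, column, placeholder=None):
    """Redaction: overwrite a column with a placeholder (None by default)."""
    return [{**r, column: placeholder} for r in records]


# Made-up sample records standing in for a production extract.
users = [
    {"name": "Real Person A", "email": "a@example.com", "ssn": "000-00-0001"},
    {"name": "Real Person B", "email": "b@example.com", "ssn": "000-00-0002"},
    {"name": "Real Person C", "email": "c@example.com", "ssn": "000-00-0003"},
]

rng = random.Random(42)  # seeded only to make the example repeatable
masked = substitute(users, "name", FAKE_NAMES, rng)
masked = shuffle_column(masked, "email", rng)
masked = redact(masked, "ssn")
```

Each helper returns new records instead of mutating its input, so the original extract stays untouched. Note that a random shuffle can leave some values in their original rows, which matters for small tables.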

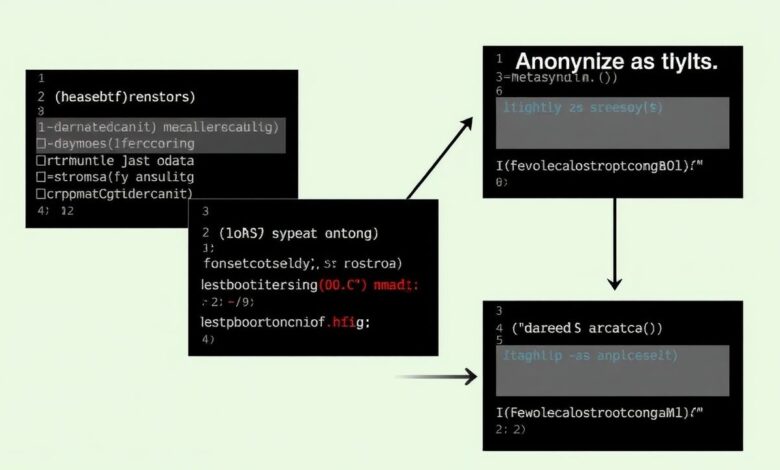

A key challenge in data masking is maintaining referential integrity and consistency across multiple datasets or even within the same application logic. Masked data must still allow the application to function correctly (e.g., a masked postcode must correspond to a masked city if the application validates addresses).
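One common way to keep masked identifiers consistent across tables is a keyed hash: the same input and key always yield the same token, so joins still work after masking. The sketch below uses Python's standard `hmac` module; the `mask_id` helper, the placeholder key, and the two sample tables are assumptions made for illustration.

```python
import hashlib
import hmac


def mask_id(value: str, key: bytes, length: int = 16) -> str:
    """Deterministically map an identifier to a hex token using HMAC-SHA256."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncation keeps tokens short; a shorter token raises the collision chance.
    return digest[:length]


# Placeholder key for the example; a real key belongs in a secret store.
key = b"demo-key-load-from-a-secret-store"

customers = [
    {"customer_id": "C-1001", "city": "Leeds"},
    {"customer_id": "C-1002", "city": "York"},
]
orders = [
    {"order_id": 1, "customer_id": "C-1001"},
    {"order_id": 2, "customer_id": "C-1001"},
    {"order_id": 3, "customer_id": "C-1002"},
]

# Masking both tables with the same key preserves the foreign-key relationship.
masked_customers = [{**c, "customer_id": mask_id(c["customer_id"], key)} for c in customers]
masked_orders = [{**o, "customer_id": mask_id(o["customer_id"], key)} for o in orders]
```

Because the mapping depends on the key, anyone who obtains it can test guesses against the tokens, so this approach is closer to pseudonymization than to irreversible anonymization.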

2. Pseudonymization:

Pseudonymization replaces direct identifiers (like names or IDs) with artificial identifiers (pseudonyms). Unlike full anonymization, it is reversible, but only with additional information (a key or mapping table) that is kept separate and secure. GDPR defines pseudonymization explicitly and names it as an example of an appropriate safeguard, for instance in its provisions on data protection by design and on security of processing. It adds a layer of privacy while still allowing re-identification under controlled circumstances if necessary. Note that pseudonymized data still counts as personal data under GDPR, so the regulation continues to apply to it.
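A minimal sketch of the mapping-table approach follows. The `Pseudonymizer` class and its method names are hypothetical; in practice the mapping would live in a separate, access-controlled store rather than in memory next to the data.

```python
import secrets


class Pseudonymizer:
    """Replace identifiers with random tokens and remember the mapping."""

    def __init__(self):
        self._forward = {}  # real identifier -> pseudonym
        self._reverse = {}  # pseudonym -> real identifier

    def pseudonymize(self, identifier: str) -> str:
        """Return the pseudonym for an identifier, creating one if needed."""
        if identifier not in self._forward:
            token = "u_" + secrets.token_hex(8)
            while token in self._reverse:  # regenerate on the rare clash
                token = "u_" + secrets.token_hex(8)
            self._forward[identifier] = token
            self._reverse[token] = identifier
        return self._forward[identifier]

    def reidentify(self, token: str) -> str:
        """Controlled reversal; only possible for whoever holds the mapping."""
        return self._reverse[token]


pseudonymizer = Pseudonymizer()
token = pseudonymizer.pseudonymize("alice@example.com")
```

Since the tokens are random, they reveal nothing about the original value on their own; all of the re-identification risk sits in the mapping table.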

3. Generalization/Aggregation:

This involves making data less specific. Instead of recording an exact age, data might be grouped into age ranges (e.g., 25-34). Similarly, precise locations might be generalized to a city or region. Aggregation involves summarizing data for groups rather than individuals (e.g., average salary per department instead of individual salaries). This technique helps prevent individuals from being identified within a dataset.
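Both ideas are straightforward to sketch. In the example below, the band edges, the helper names, and the `min_group_size` option (which drops groups too small to hide an individual) are assumptions chosen for illustration, not fixed rules.

```python
import bisect
from collections import defaultdict
from statistics import mean

# Hypothetical band edges; each band runs from one edge up to the next edge minus one.
AGE_EDGES = [18, 25, 35, 45, 55, 65]


def age_band(age: int) -> str:
    """Generalization: map an exact age to a coarse range label."""
    i = bisect.bisect_right(AGE_EDGES, age)
    if i == 0:
        return f"<{AGE_EDGES[0]}"
    if i == len(AGE_EDGES):
        return f"{AGE_EDGES[-1]}+"
    return f"{AGE_EDGES[i - 1]}-{AGE_EDGES[i] - 1}"


def average_by(records, group_key, value_key, min_group_size=1):
    """Aggregation: average a value per group, skipping groups that are too small."""
    groups = defaultdict(list)
    for r in records:
        groups[r[group_key]].append(r[value_key])
    return {g: mean(v) for g, v in groups.items() if len(v) >= min_group_size}


# Made-up staff records for the example.
staff = [
    {"age": 29, "department": "Engineering", "salary": 50000},
    {"age": 41, "department": "Engineering", "salary": 60000},
    {"age": 36, "department": "Legal", "salary": 70000},
]

generalized = [{**r, "age": age_band(r["age"])} for r in staff]
averages = average_by(staff, "department", "salary", min_group_size=2)
```

With `min_group_size=2`, the single-person Legal department is left out of `averages`, since an average over one person would simply reveal that person's salary.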

4. Data Perturbation:

Data perturbation adds random noise to slightly alter the original values. This makes it difficult to determine the exact original value for any single record while approximately preserving aggregate statistics such as means and distributions. Differential privacy is a formal framework built around this concept, aiming to allow queries over a dataset while providing strong guarantees that the presence or absence of any single individual’s data does not significantly affect the outcome.
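As a rough illustration, the Laplace mechanism from the differential privacy literature adds noise with scale sensitivity/epsilon to a query result. The sketch below applies it to a counting query, whose sensitivity is 1. The helper names and the epsilon value are assumptions, and Python's `random` module is not suitable for real privacy guarantees; a vetted library should be used in practice.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def noisy_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Count matching values, then add Laplace noise with scale 1/epsilon."""
    true_count = sum(1 for v in values if predicate(v))
    # Adding or removing one person changes a count by at most 1 (sensitivity 1).
    return true_count + laplace_noise(1.0 / epsilon, rng)


ages = [23, 31, 35, 44, 27]  # made-up data
rng = random.Random(7)  # seeded only to make the example repeatable
result = noisy_count(ages, lambda age: age >= 30, epsilon=0.5, rng=rng)
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon gives more accurate answers with weaker protection.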

5. Synthetic Data Generation:

This advanced technique involves creating entirely new datasets that statistically mimic the original sensitive data without using any of the original records. Synthetic data can be generated based on patterns and distributions learned from the real data. This can offer a high level of privacy protection, provided the generator does not memorize and reproduce real records, but it requires sophisticated techniques to ensure the synthetic data accurately reflects the characteristics of the real data.
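To make the idea concrete, here is a deliberately naive sketch that fits each column independently (a normal distribution for numeric columns, observed frequencies for categorical ones) and samples new rows. The function names and sample data are hypothetical, and sampling columns independently discards correlations between them, which real generators work hard to preserve.

```python
import random
from collections import Counter
from statistics import mean, stdev


def fit(records, numeric_cols, categorical_cols):
    """Learn a simple per-column model from the real records."""
    model = {}
    for col in numeric_cols:
        values = [r[col] for r in records]
        model[col] = ("numeric", mean(values), stdev(values))
    for col in categorical_cols:
        counts = Counter(r[col] for r in records)
        model[col] = ("categorical", list(counts), list(counts.values()))
    return model


def sample(model, n, rng):
    """Draw n synthetic rows, sampling every column independently."""
    rows = []
    for _ in range(n):
        row = {}
        for col, spec in model.items():
            if spec[0] == "numeric":
                row[col] = rng.gauss(spec[1], spec[2])
            else:
                row[col] = rng.choices(spec[1], weights=spec[2])[0]
        rows.append(row)
    return rows


# Made-up "real" data for the example.
real = [
    {"age": 29, "plan": "free"},
    {"age": 41, "plan": "pro"},
    {"age": 36, "plan": "free"},
    {"age": 52, "plan": "free"},
]

rng = random.Random(1)  # seeded only to make the example repeatable
synthetic = sample(fit(real, ["age"], ["plan"]), n=100, rng=rng)
```

Even a simple model like this can leak information when the source data is tiny or contains rare values, so synthetic output still deserves a re-identification review.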

Implementing Anonymization: Best Practices for Developers

- Understand the Data: Identify all sensitive data points and how they are related. Mapping data flows is crucial.

- Choose the Right Technique: The best approach depends on the data type, use case (testing, analytics, etc.), and required level of privacy vs. data utility.

- Maintain Consistency: For techniques like masking and pseudonymization, ensure that the same original value is consistently transformed into the same masked/pseudonymized value across different datasets and systems where necessary.

- Use Tools and Automation: Manually anonymizing large datasets is impractical and error-prone. Utilize specialized data anonymization tools or libraries, and script the process so that it runs the same way every time. Automation makes results repeatable and saves time and resources.

- Regularly Review and Validate: Anonymization processes should be regularly reviewed to ensure they remain effective as data structures or requirements change. Validate that the anonymized data is still fit for its intended purpose.

- Consider the Re-identification Risk: Even anonymized data can potentially be re-identified, especially if combined with external data sources (known as linkage attacks). Techniques like k-anonymity (ensuring each record is indistinguishable from at least k-1 other records with respect to its quasi-identifiers, such as age range and postcode) and l-diversity/t-closeness (addressing homogeneity and proximity of sensitive values within indistinguishable groups) are measures to assess and mitigate this risk.
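The k-anonymity measure mentioned in the last point can be checked with a short script. In this sketch, the function name, the choice of quasi-identifier columns, and the sample rows are assumptions for illustration.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group sharing the same quasi-identifier values."""
    if not records:
        return 0
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


# Made-up, already generalized records.
rows = [
    {"age_band": "25-34", "region": "North", "diagnosis": "A"},
    {"age_band": "25-34", "region": "North", "diagnosis": "B"},
    {"age_band": "35-44", "region": "South", "diagnosis": "A"},
    {"age_band": "35-44", "region": "South", "diagnosis": "C"},
    {"age_band": "35-44", "region": "South", "diagnosis": "C"},
]

k = k_anonymity(rows, ["age_band", "region"])
```

A dataset is k-anonymous for a given k if this value is at least k. A result of 1 means at least one record is unique on its quasi-identifiers and is a candidate for further generalization or suppression.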

For developers, integrating anonymization into the CI/CD pipeline is an excellent practice, ensuring that test environments are automatically populated with safe, anonymized data.
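One simple safeguard in such a pipeline is a check that fails the build when a test dataset still appears to contain real contact details. The sketch below flags email addresses outside a hypothetical allowlist of reserved example domains; the regular expression is intentionally simple and will not catch every form of PII.

```python
import re

# Simplified email pattern; the capture group holds the domain part.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@([A-Za-z0-9.-]+\.[A-Za-z]{2,})")

# Hypothetical allowlist: domains that masked test data is expected to use.
SAFE_DOMAINS = {"example.com", "example.org", "example.net"}


def find_unmasked_emails(rows):
    """Return (row index, column, value) for every email outside the allowlist."""
    leaks = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if not isinstance(value, str):
                continue
            for match in EMAIL_RE.finditer(value):
                if match.group(1).lower() not in SAFE_DOMAINS:
                    leaks.append((index, column, match.group(0)))
    return leaks


# Made-up rows standing in for a test database export.
test_rows = [
    {"id": 1, "email": "user1@example.com", "note": "ok"},
    {"id": 2, "email": "jane.doe@gmail.com", "note": "contact bob@corp.io"},
]

leaks = find_unmasked_emails(test_rows)
```

In a CI job, a non-empty `leaks` list would typically cause the step to exit with a non-zero status so the unmasked data never reaches a shared environment.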

Anonymization and Compliance

Regulations like GDPR and CCPA emphasize data minimization and purpose limitation. Anonymizing data supports these principles by limiting the exposure of identifiable information. Understanding your obligations under relevant regulations is the first step. For an introduction to GDPR from a developer’s perspective, you might find this article helpful: Privacy by Design: Writing Code That Respects User Data.

Ensuring that the chosen anonymization technique meets legal standards for ‘anonymization’ (rendering data irreversibly non-identifiable) versus ‘pseudonymization’ (potentially reversible) is critical for compliance.

Conclusion

Anonymizing user data is a vital skill set for modern developers. It’s a cornerstone of building privacy-respecting applications, complying with regulations, and enabling secure development and testing workflows. By mastering techniques like masking, pseudonymization, generalization, perturbation, and synthetic data generation, developers can significantly enhance the security posture of their applications and protect user privacy effectively.

Start exploring these techniques and integrate them into your development lifecycle today. Protecting user data is not just a legal requirement; it’s an ethical one.

For more detailed guidance on specific regulations, consult official resources such as the GDPR information portal.